Jun Xia has been a Postdoctoral Research Associate at the University of Notre Dame since 2023, advised by Prof. Yiyu Shi. He received his Ph.D. from East China Normal University in 2023, supervised by Prof. Mingsong Chen. He received his M.S. from Jiangnan University in 2019 (supervised by Prof. Zhilei Chai and Prof. Wei Yan of Peking University) and his B.S. from Hainan University in 2016.

His research interests include Heterogeneous On-device Federated Learning and Trustworthy AI.

🔥 News

- 2024.12: 🎉🎉 A NAIRR Pilot award is funded by NSF (cash equivalent: $98,400).

- 2024.12: 🎉🎉 A paper is accepted by T-SUSC 2024.

- 2024.12: 🎉🎉 A paper is accepted by DATE 2025.

- 2024.09: 🎉🎉 A paper is accepted by NeurIPS 2024.

- 2024.07: 🎉🎉 A paper is accepted by ICCAD 2024.

- 2024.02: 🎉🎉 A paper is accepted by DAC 2024.

📝 Selected Publications

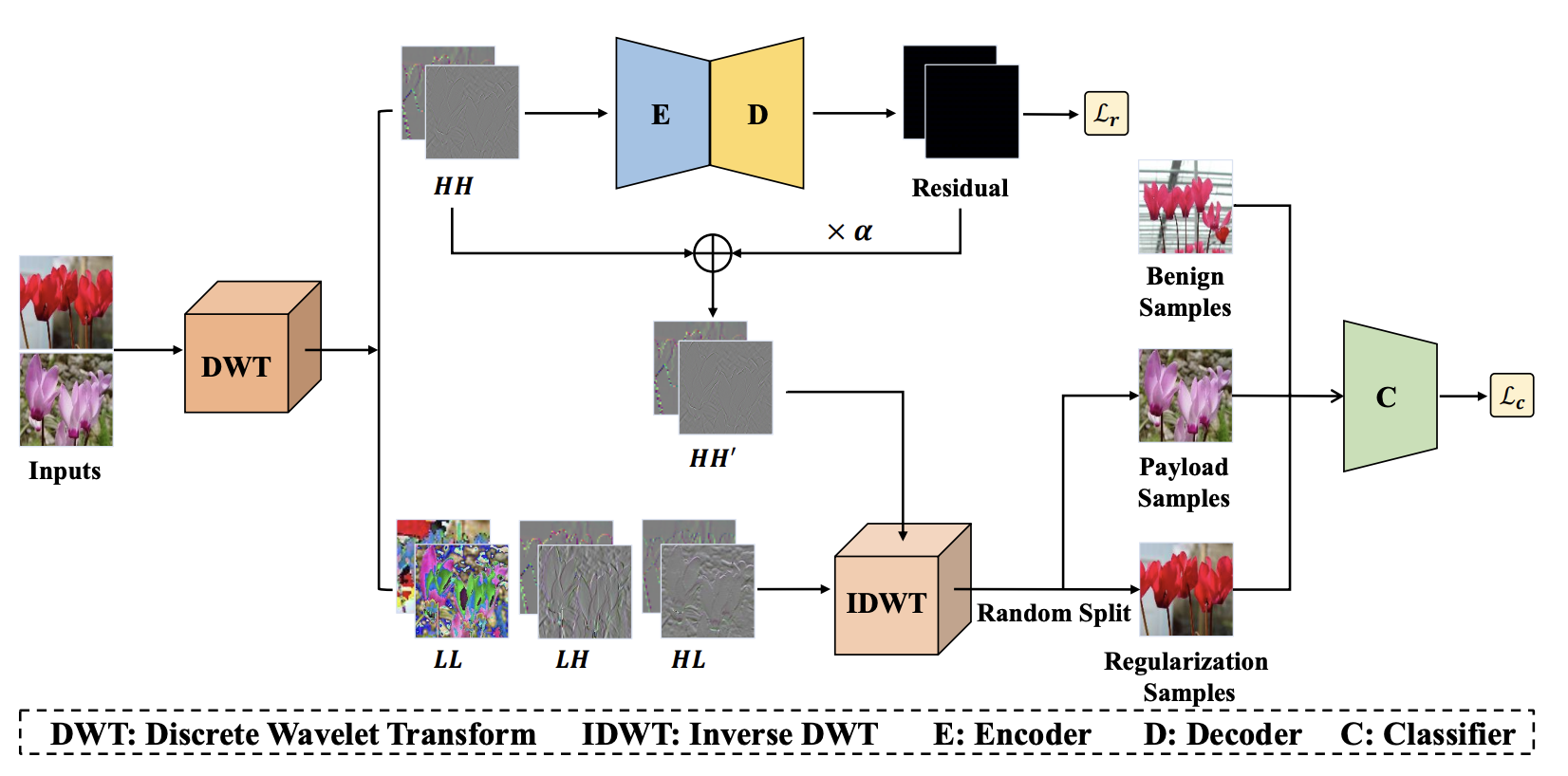

WaveAttack: Asymmetric Frequency Obfuscation-based Backdoor Attacks Against Deep Neural Networks (Machine Learning Top Conference, CCF-A, Acceptance Ratio: 25%)

Jun Xia, Zhihao Yue, Yingbo Zhou, Zhiwei Ling, Yiyu Shi, Xian Wei, Mingsong Chen

- We obtain image high-frequency features through Discrete Wavelet Transform (DWT) to generate backdoor triggers.

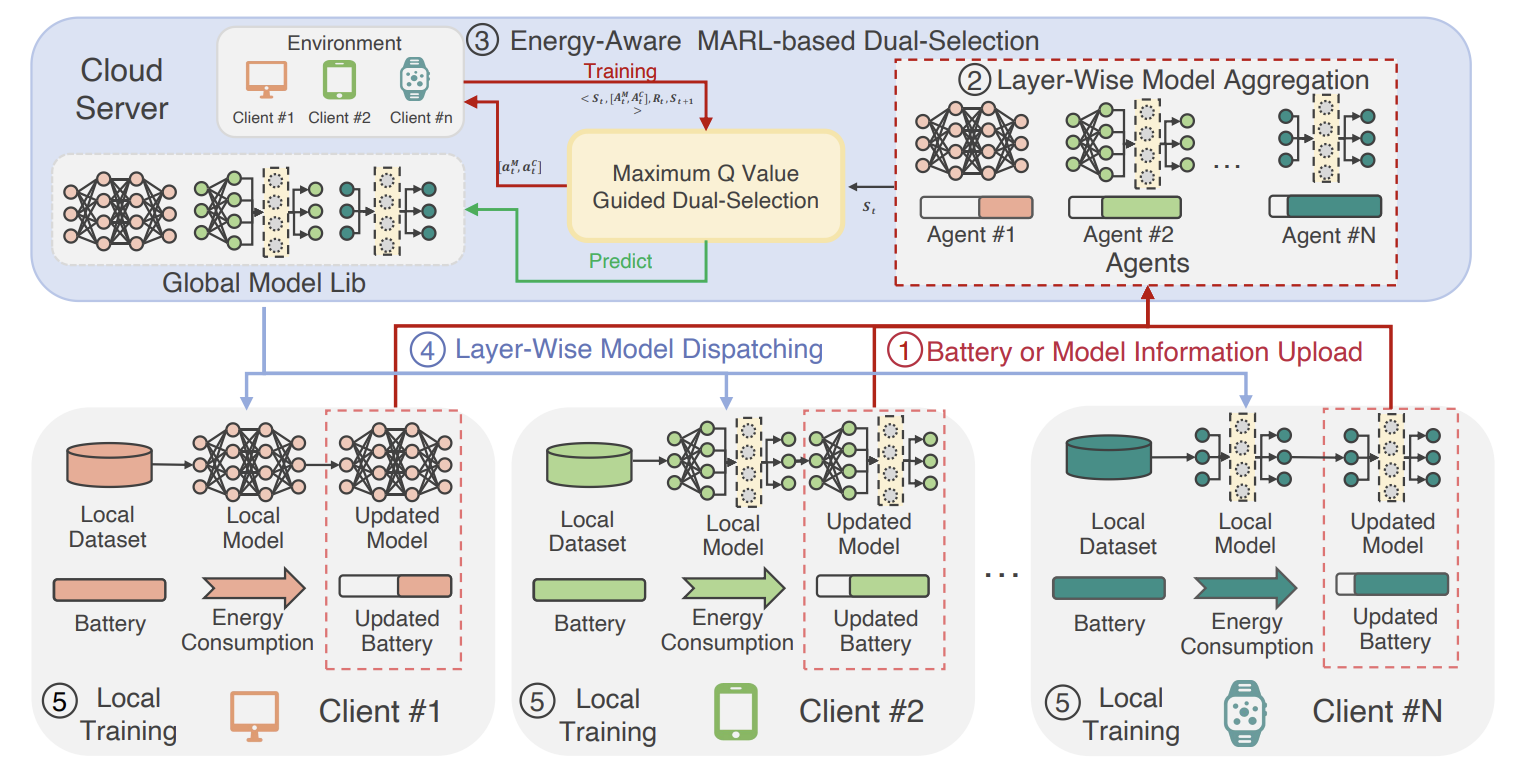

Towards Energy-Aware Federated Learning via MARL: A Dual-Selection Approach for Model and Client (EDA Top Conference, CCF-B, Acceptance Ratio: 24%)

Jun Xia, Yi Zhang, Yiyu Shi

- We propose an energy-aware FL framework named DR-FL, which considers the energy constraints of both clients and heterogeneous deep learning models to enable energy-efficient FL. Unlike vanilla FL, DR-FL adopts our proposed Multi-Agent Reinforcement Learning (MARL)-based dual-selection method, which allows participating devices to contribute to the global model effectively and adaptively according to their computing capabilities and energy capacities.

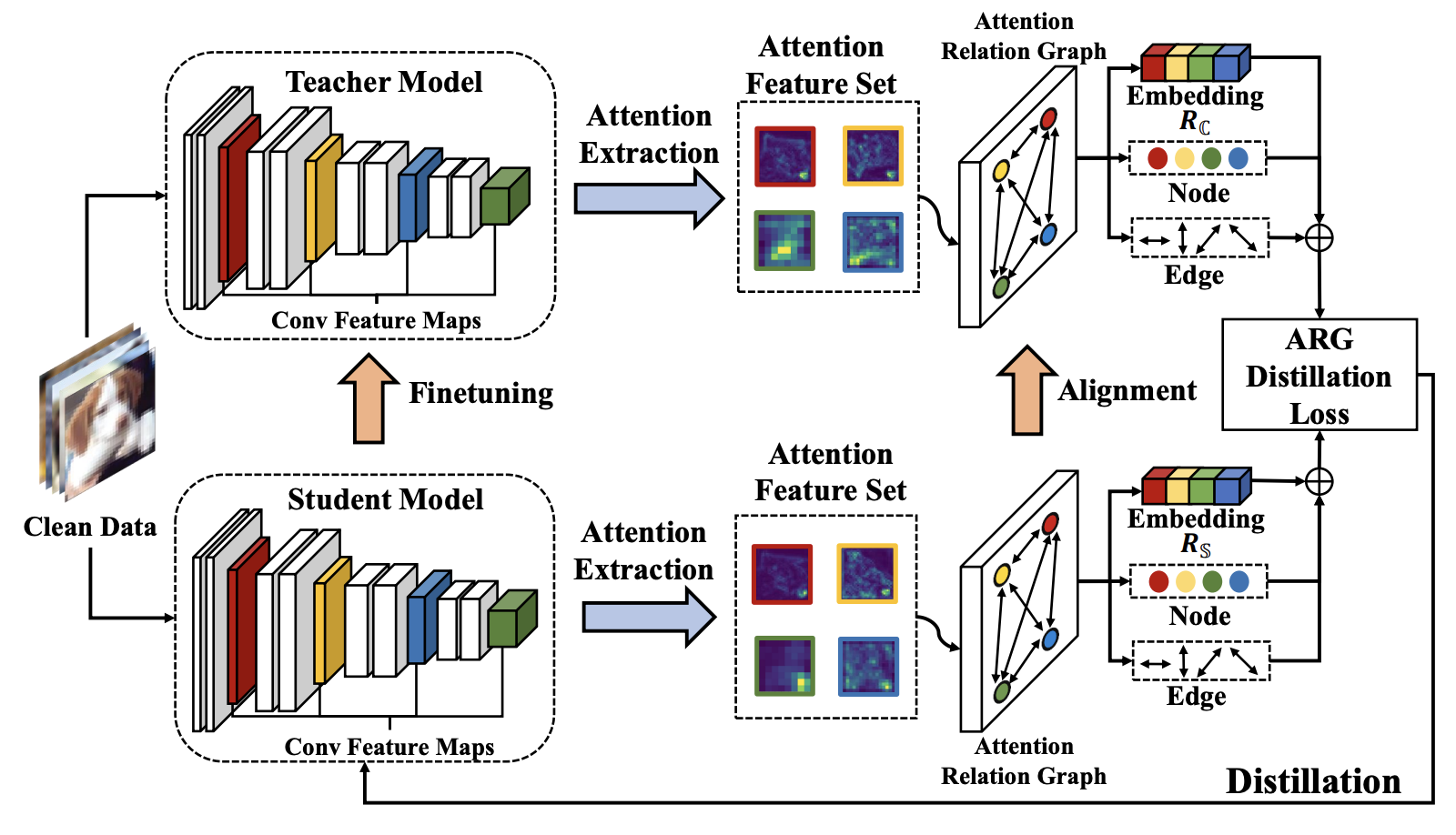

Eliminating Backdoor Triggers for Deep Neural Networks Using Attention Relation Graph Distillation (AI Top Conference, CCF-A, Acceptance Ratio: 14%)

Jun Xia, Ting Wang, Jiepin Ding, Xian Wei, Mingsong Chen

- We introduce a novel backdoor defense framework named Attention Relation Graph Distillation (ARGD), which explores the correlations among attention features of different orders using our proposed Attention Relation Graphs (ARGs). By aligning the ARGs of the teacher and student models during knowledge distillation, ARGD can eradicate more backdoor triggers than Neural Attention Distillation (NAD).

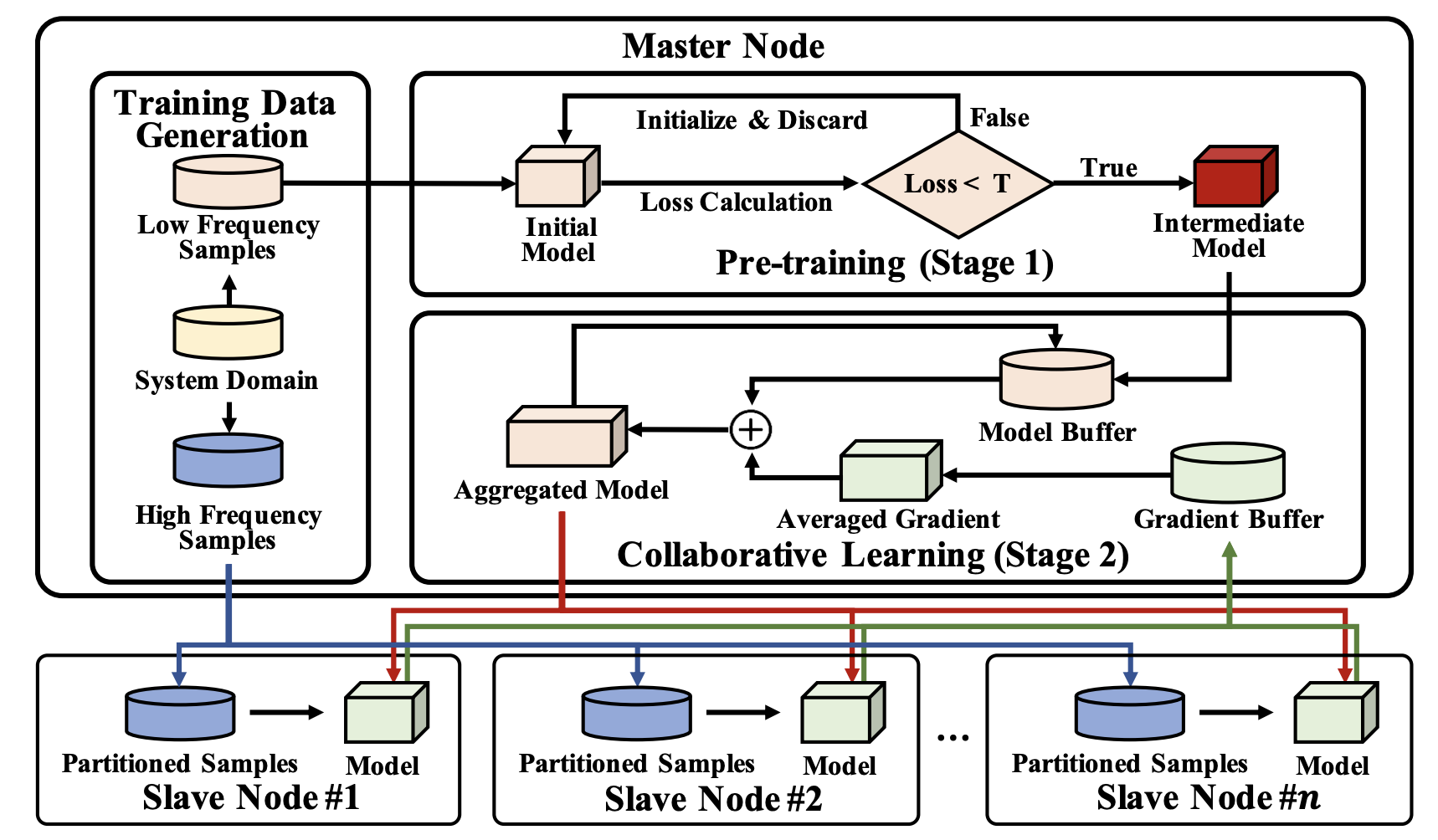

Accelerated Synthesis of Neural Network-based Barrier Certificates Using Collaborative Learning (EDA Top Conference, CCF-A, Acceptance Ratio: 23%)

Jun Xia, Ming Hu, Xin Chen, Mingsong Chen

- We fully exploit the parallel processing capability of the underlying hardware to accelerate the search for barrier certificates.

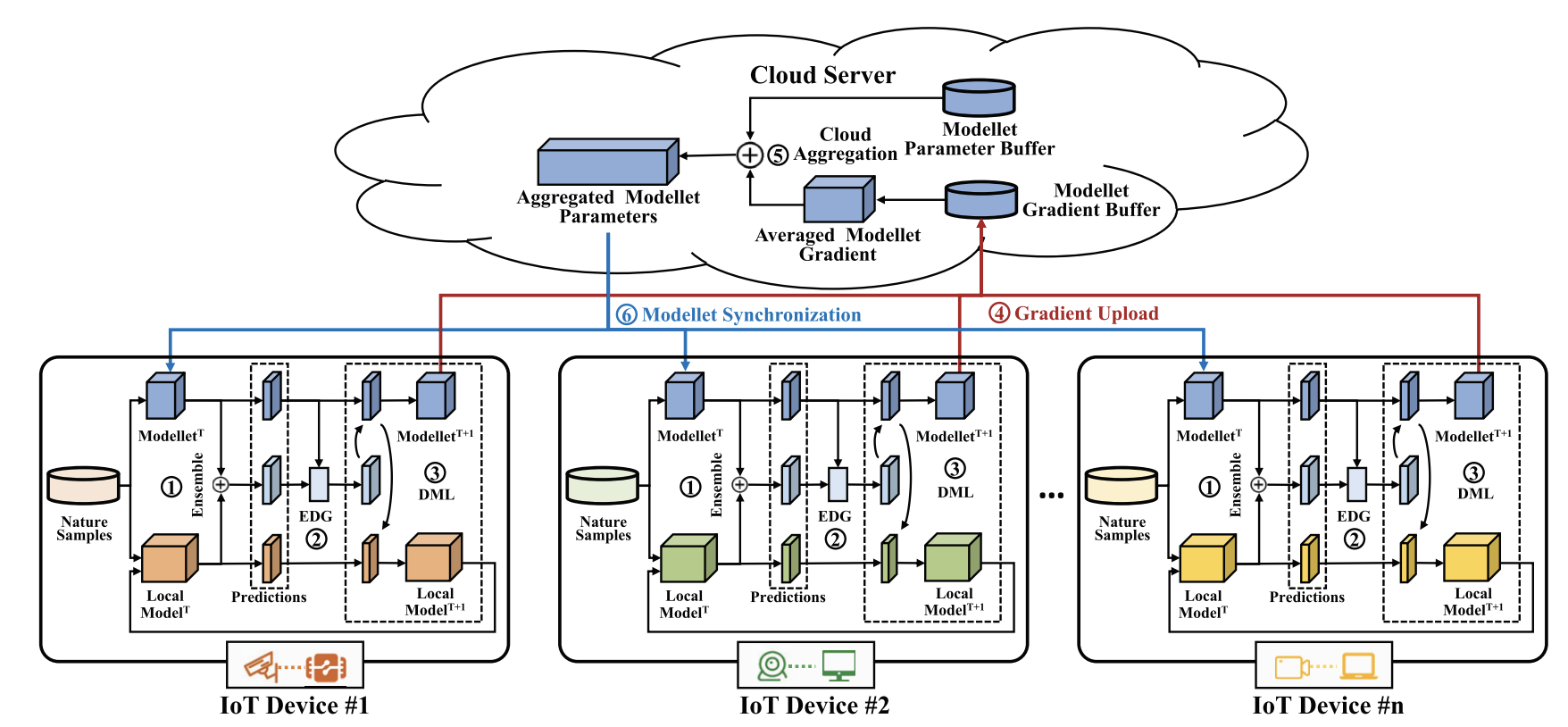

PervasiveFL: Pervasive Federated Learning for Heterogeneous IoT Systems (EDA Top Journal, CCF-A, Acceptance Ratio: 23%)

Jun Xia, Tian Liu, Zhiwei Ling, Ting Wang, Xin Fu, Mingsong Chen

- We propose a novel framework named PervasiveFL that enables efficient and effective FL among heterogeneous IoT devices. Without modifying the original local models, PervasiveFL installs a lightweight neural network (NN) model, called a modellet, on each device. Using deep mutual learning (DML) and our entropy-based decision gating (EDG) method, modellets and local models can selectively learn from each other through soft labels.

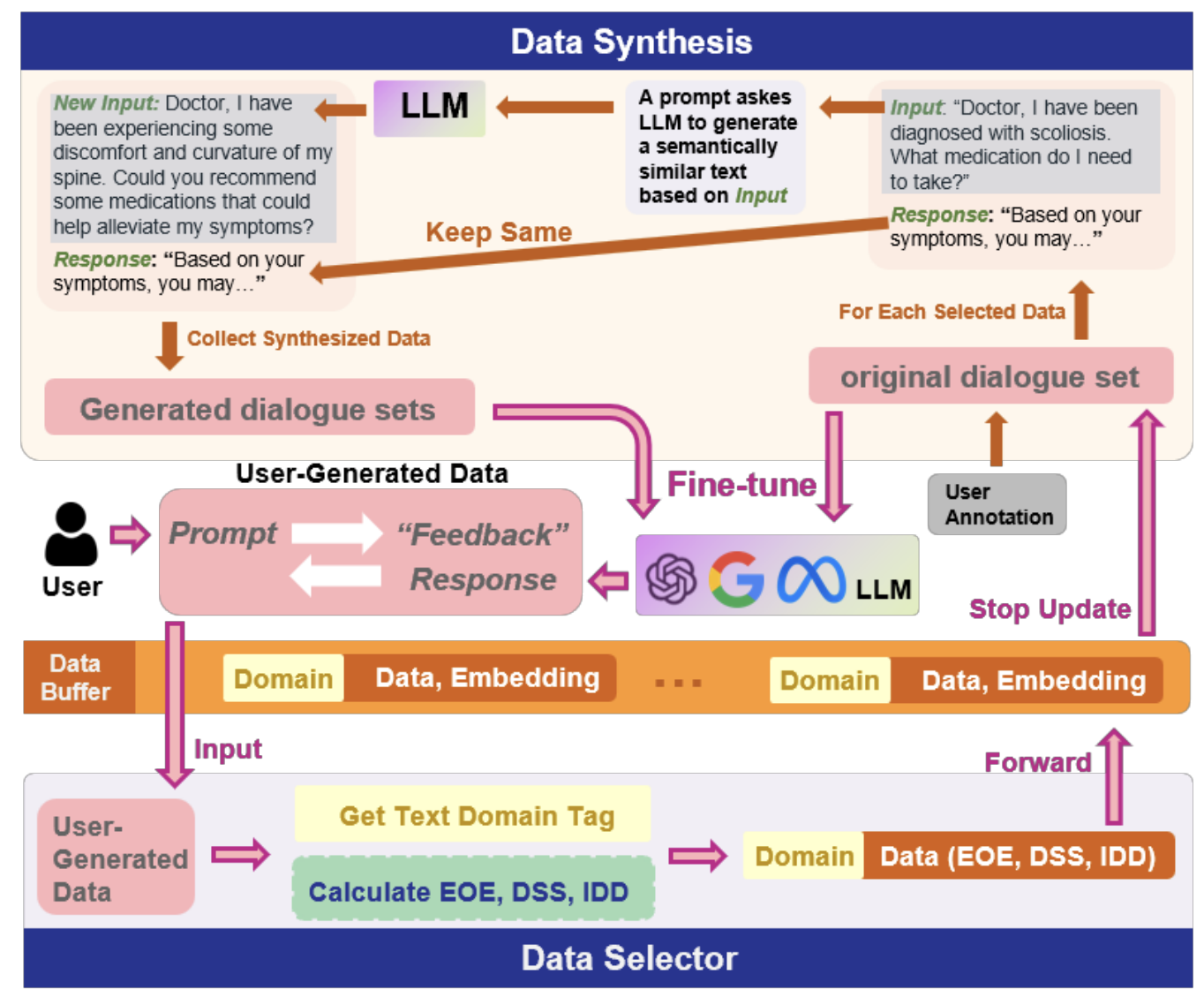

Enabling On-Device Large Language Model Personalization with Self-Supervised Data Selection and Synthesis (EDA Top Conference, CCF-A, Acceptance Ratio: 25%)

Ruiyang Qin, Jun Xia, Zhenge Jia, Meng Jiang, Ahmed Abbasi, Peipei Zhou, Jingtong Hu, Yiyu Shi

- In this paper, we propose a novel framework that selects and stores the most representative data online in a self-supervised manner. The selected data has a small memory footprint and requires only infrequent requests for user annotations during subsequent fine-tuning.

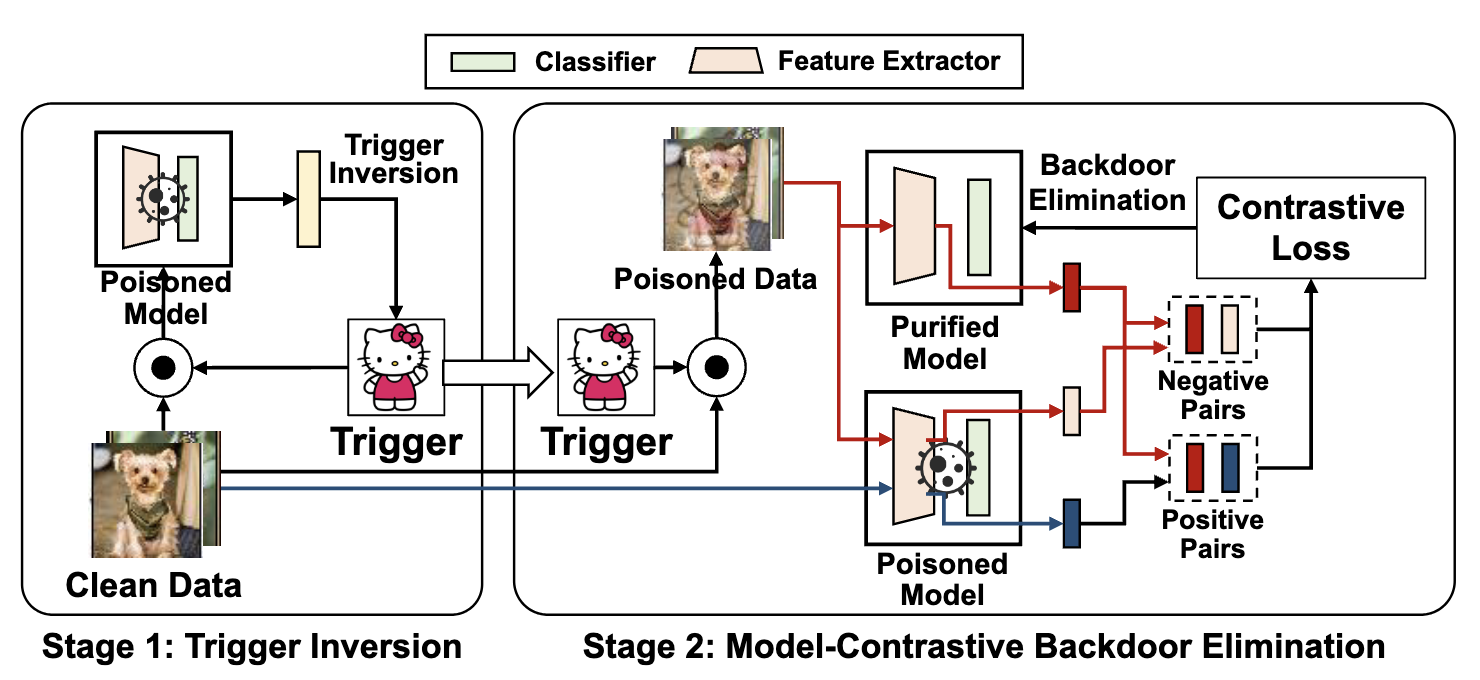

Model-Contrastive Learning for Backdoor Elimination (Multimedia Top Conference, CCF-A, Acceptance Ratio: 25%)

Zhihao Yue, Jun Xia, Zhiwei Ling, Ming Hu, Ting Wang, Xian Wei, Mingsong Chen

- In this paper, we propose a novel two-stage backdoor defense method, named MCLDef, based on Model-Contrastive Learning (MCL). MCLDef can purify the backdoored model by pulling the feature representations of poisoned data towards those of their clean data counterparts.

🎖 Honors and Awards

- 2024.12 The National Artificial Intelligence Research Resource (NAIRR) Pilot Award (PI; First Year; Cash Equivalent $98,400; Hope to see you in Washington, 2025.2.19 - 2.21).

- 2023.06 Shanghai Outstanding Graduates

- 2023.03 China National Scholarship ($4280)

- 2022.06 PhD Outstanding Program (ECNU) PI ($4280)

- 2021.06 Outstanding Student (ECNU)

📖 Education

- 2023.09 - 2026.09 (Expected), University of Notre Dame, Department of CSE, Postdoc Research Fellow, Advisor: Prof. Yiyu Shi.

- 2019.09 - 2023.06 (PhD), East China Normal University, Department of Software Engineering, Software Engineering, A-level subject rating. Supervisor: Prof. Mingsong Chen.

- 2016.09 - 2019.06 (Master), Jiangnan University, Department of Internet of Things, Computer Science. Supervisor: Prof. Zhilei Chai.

💬 Invited Talks

- 2024.10, ICCAD 2024 Session Chair.

- 2022.12, East China Normal University.

💻 Research Internships

- 2016.12 - 2019.06, Peking University, Supervisor: Prof. Wei Yan.

📖 Teaching

- 2025 Spring, Official Instructor (Experimental Part), CSE 60685, University of Notre Dame

- 2024 Spring, TA, CSE 60685, University of Notre Dame